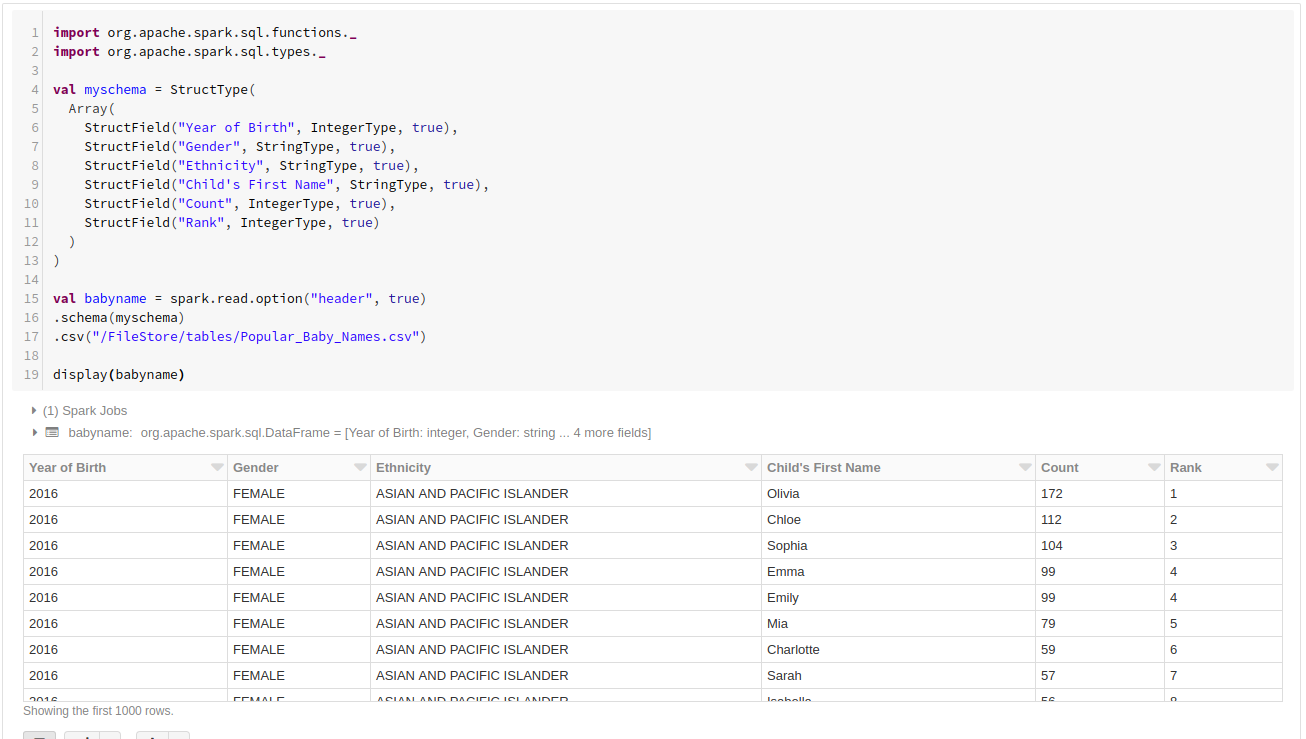

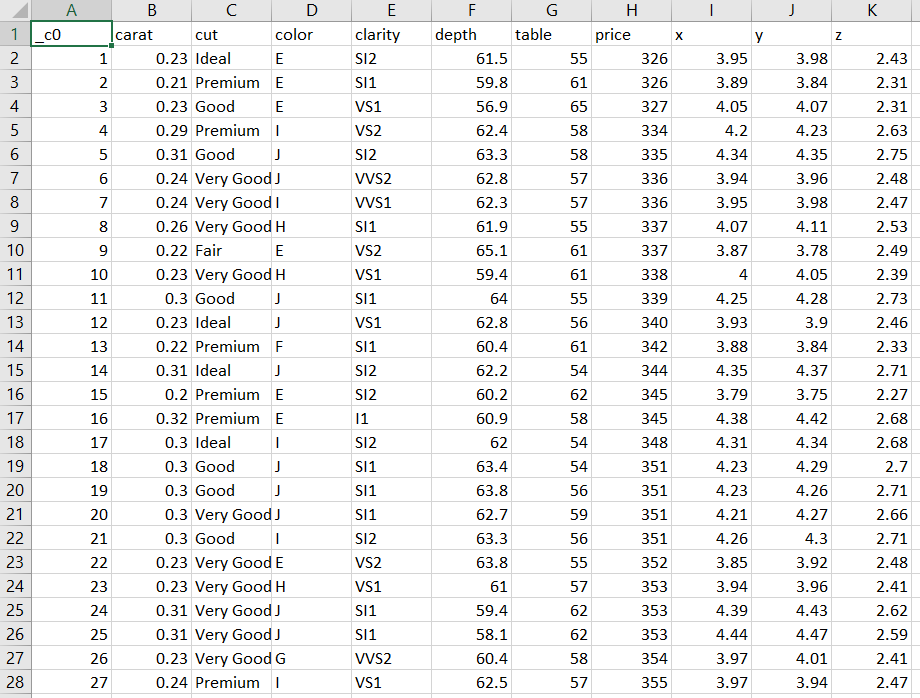

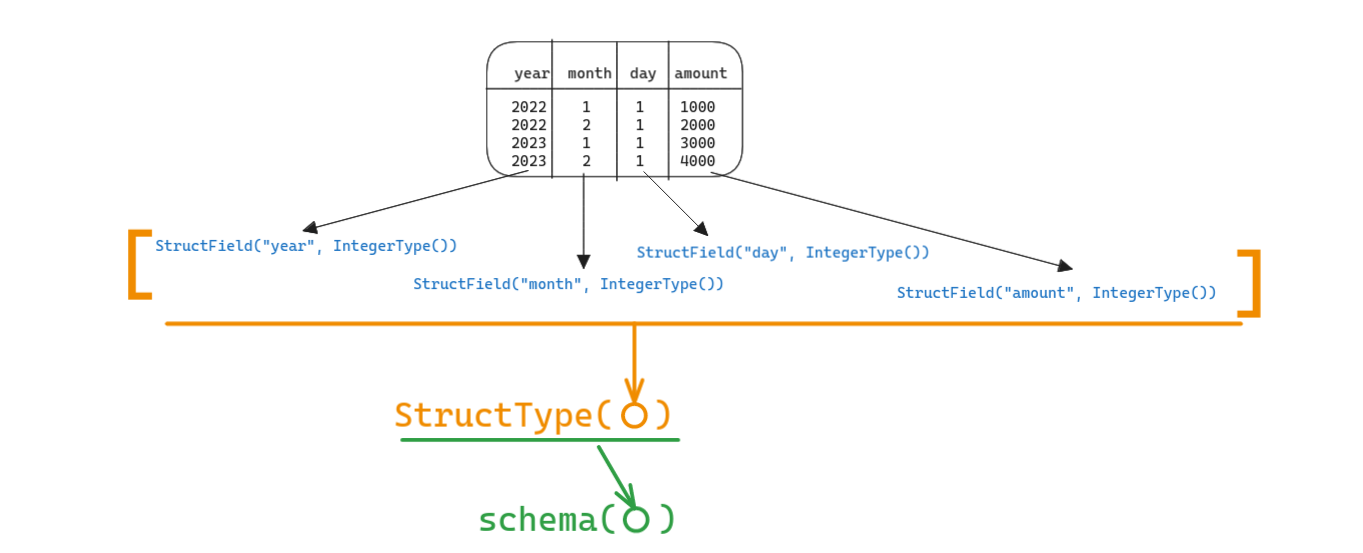

PySpark Read CSV With Schema

In this article, I'm sticking to the `.format().load()` way of loading CSV. Instead of using the `inferSchema` option, we can read CSV files with a specified schema. In this example, we read a CSV file containing a small 5 × 5 dataset, whose schema is as follows: `from pyspark.sql.types import *` followed by `customSchema = StructType([StructField("a", StringType(), True), StructField("b", …`

A More Practical Read Command
